Are you looking for ways to market your small business website with a limited budget?

Whether it’s with established sites such as Google and Facebook, or newer outlets like Pinterest, there are plenty of options available to promote your site.

There are at least 30 ways to market your website that require an investment of time but no credit card. Some of these are oldies but goodies, while others are newer and exciting avenues you may not have tried out yet.

Here are 30 things you can do today to get started marketing your website for free.

- Press releases still work. Granted, a submission to PRWeb or a Vocus account makes pickup and link benefits much easier to come by, but those cost money, so for this article let’s reiterate the best free press release sources:

- 24-7PressRelease.com

- PRLog.org

- IdeaMarketers.com

- Send the press release to your local media outlets, or any niche media outlets that may be interested in what you do.

- Claim, verify, and update your Google Local Business listing. This is extremely important. Google Local Listings have been absorbed into Google+, so be sure to check out this great resource over at Blumenthals.com to keep up to date on how to manage your Google Local Listing.

- Find a niche social media site that pertains to your exact business and participate. Be helpful, provide relevant and useful information, and your word of mouth advertising will grow from that engagement.

- Examples:

- Travel or hospitality business – Tripadvisor.com forums

- Photography store – Photo.net or RockTheShotForum.com

- Wedding Planning or Favor site – Brides.com or Onewed.com forums

- Search your niche or service plus forums to find ideas. If there isn’t a forum out there, consider starting one.

- Build a Google+ page for your business and follow businesses that are related to your product or service niche. Share informative and relevant content and link to your profile from your website. You should also consider allowing users to +1 your content on a page-by-page basis.

- Setting up a mutually beneficial arrangement with local businesses or others in your niche can help you reach an audience you never reached before. Be sure to answer the question "Will my user find this information beneficial as they shop and purchase?" every time you link to a resource, or request a link or listing on another site.

- Comment and offer original, well-thought-out, sensible information, opinion, and help on blogs that are relevant to your website's topic, and be sure to leave your URL. Even if a nofollow tag is attached, you could gain a bit of traffic and some credibility as an authority on the subject matter. This is not blog comment spamming; this is engaging in a conversation relevant to your website's topic.

- Set up and verify a Webmaster Central Account at Google.

- Set up a Bing Webmaster Tools account and verify it.

- Update or create your XML sitemap and upload it to Google Webmaster Tools and Bing Webmaster Tools.

- Write a "how-to" article that addresses your niche for Wikihow.com or Answers.com. This is kind of fun and a good resource for getting mentions and links. Looking at your product or service in a step-by-step manner is often enlightening in several ways. It can help you better explain your products and services on your own website. I will say I don’t know why some of these sites still rank well, because many of them are junk. I do like most of the answers on the two sites mentioned above. Be picky about where you participate.

- Write unique HTML page titles for all of your pages. This is still extremely important, so don’t skimp on this one.

- Share your photos at Flickr – get a profile, write descriptions, and link to your website. Don't share photos you don't own or have permission to use.

- Start a blog. There's nothing wrong with getting the basics of blogging down by using a free service from Blogger or WordPress.

- Make sure your Bing and Yahoo Local listings are up to date.

- Update and optimize your description and URL at YP.com. They'll try to get you to spend money on an upgraded listing or some other search marketing options. Don't bother with that, but make sure the information is accurate and fresh.

- Use your Bing Webmaster Tools account to look at your incoming links. How do they look? Are all of the sites relevant and on-topic? If not, reevaluate your link building practices, then contact as many of the irrelevant sites as you can and ask them to take down your link. A clean and relevant incoming link profile is important; cleaning up bad links is a necessity until we can tell Google and Bing which links we want them to ignore.

- Make a slideshow of your products or record an original how-to video and upload it to YouTube. Be sure to optimize your title and descriptions. Once it's uploaded, write a new page and embed the video on your own website. Add a transcription of the video if possible.

- Try a new free keyword tool for researching website optimization, then move on to the next tip.

- Add a page to your site focused on a top keyword phrase you found with the keyword tool in the previous tip.

- Build a Facebook Page and work to engage those that are interested in your product or service. Facebook is so much more robust than it ever was! Create groups, events, and photo albums. Link to your Facebook profile from your site and allow visitors to your site to like and share your content.

- Install Google Analytics if you don’t have any tracking software. The program is pretty amazing and it's free. You need to do this if you haven’t already. It's that important.

- Start tweeting, or start doing it much better than you are now – it's a great way to network with like-minded individuals.

- Pinterest is hot right now. If you have visually stimulating content that is relevant to the site's demographic, you can find great success there. Be sure you're using solid practices for marketing on Pinterest as you get started.

- Create a new list in Twitter and follow profiles of industry experts you know and trust. Use this as your modern feed reader. I don’t use RSS feed readers anymore. I like content that has been vetted by my peers and is worthy of a tweet or two.

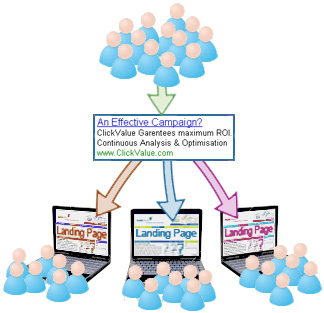

- Try a new way to write an ad for a struggling PPC ad group or campaign.

- Review your Google Analytics In-Page insights and take note of how users are interacting with your page. Where do they click? What is getting ignored? Make changes based on this knowledge.

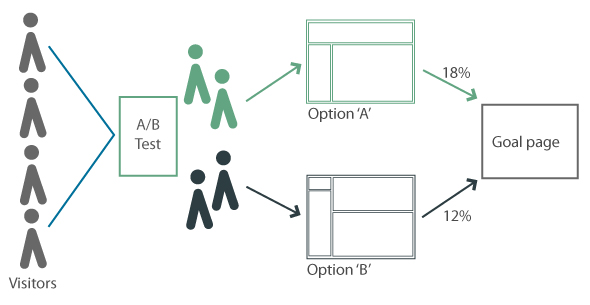

- Set up a Google Content Experiment through your Analytics account and test with the information you obtained and the changes you made in the previous tip.

- Build a map at Google Maps and add descriptions for your storefront, locations, and nearby useful points of interest. Make your map public and embed it on your own website. If possible, add links from each point of interest back to relevant content on your site.

- Keep reading Search Engine Watch for more free tips and tricks.
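If you don't have an XML sitemap yet, the sitemap tip in the list above can be handled with a few lines of code. What follows is only a minimal sketch in Python, not an official tool: the function name `build_sitemap` and the `example.com` URLs are made up for illustration, and it assumes you already have a list of your page URLs with optional last-modified dates. It follows the basic `urlset` / `url` / `loc` / `lastmod` layout of the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string.

    `pages` is a list of (url, lastmod) pairs; lastmod is a
    YYYY-MM-DD string, or None to leave the element out.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    # ElementTree escapes characters such as "&" in the URLs for us.
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages; replace these with your own site's URLs.
    pages = [
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/products", None),
    ]
    print(build_sitemap(pages))
```

Save the output as `sitemap.xml` at the root of your site, then submit that URL in your Google and Bing webmaster accounts.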

There you have it – 30 ways to market your website. Get to work and make something happen! There's no reason to say you can't be successful because you don’t have a huge advertising budget. Time is all you need.

Source: http://searchenginewatch.com/article/2048588/30-Free-Ways-To-Market-Your-Small-Business-Site